Author: Arnav Lahiry

The acceleration of Artificial Intelligence (AI) is at the forefront of public discourse. From employees and businesses to governments and academics, people are hoping to understand what this advancing technology means for them, their work, and society at large. So, what do people think about AI? In this article, we examine some of the latest statistics from research studies on collective attitudes, dive deeper into the opinions of CSR and social impact leaders, and consider what this can mean for businesses looking to responsibly harness AI for social good.

Data Sources:

Accenture: Work, workforce, workers Reinvented in the age of generative AI

Pew Research Center: Growing public concern about the role of artificial intelligence in daily life

The Alan Turing Institute: How do people feel about AI?

2024 Edelman Trust Barometer Global Report

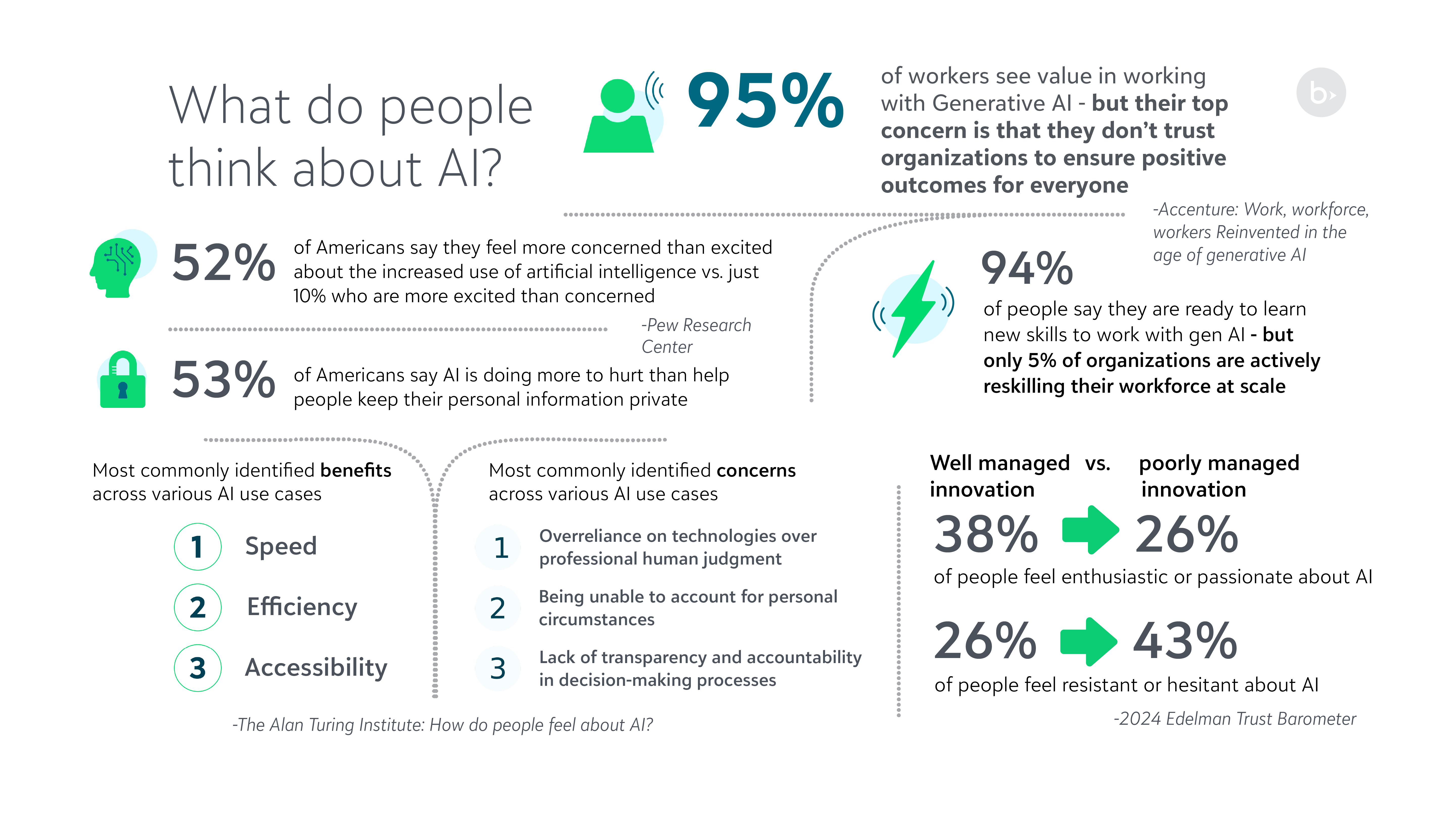

The Trust Gap

As seen above, the data shows that almost all workers (95%) see value in working with generative AI, but there is significant concern about whether organizations will ensure positive outcomes for everyone. This is particularly worrying given that the same research showed that three-quarters of organizations globally lack comprehensive strategies and initiatives to ensure positive employee experiences and outcomes with gen AI. Moreover, two-thirds of CxOs surveyed confessed that they are ill-equipped to lead this change.

The 2024 Edelman Trust Barometer report found that people’s views on AI technology are highly dependent on how well the innovation is managed, with resistance and hesitance increasing 17% for poorly managed innovation compared to well-managed innovation. At the same time, when compared with institutions such as NGOs, governments, and media, businesses remained the highest ranked on trust. To honor this trust, companies have a duty to drive forward thoughtful and informed innovation in a manner that maintains positive outcomes for their stakeholders. It is therefore important to first acknowledge what people view as the specific benefits and concerns around AI technologies.

Most Common Benefits and Concerns with AI

According to a study by The Alan Turing Institute examining opinions across various use cases of AI, people most commonly identified speed, efficiency, and accessibility as the top benefits. On the other hand, the top concerns centered on reliance on technology over human judgement, especially given a lack of transparency and accountability in these decision-making processes.

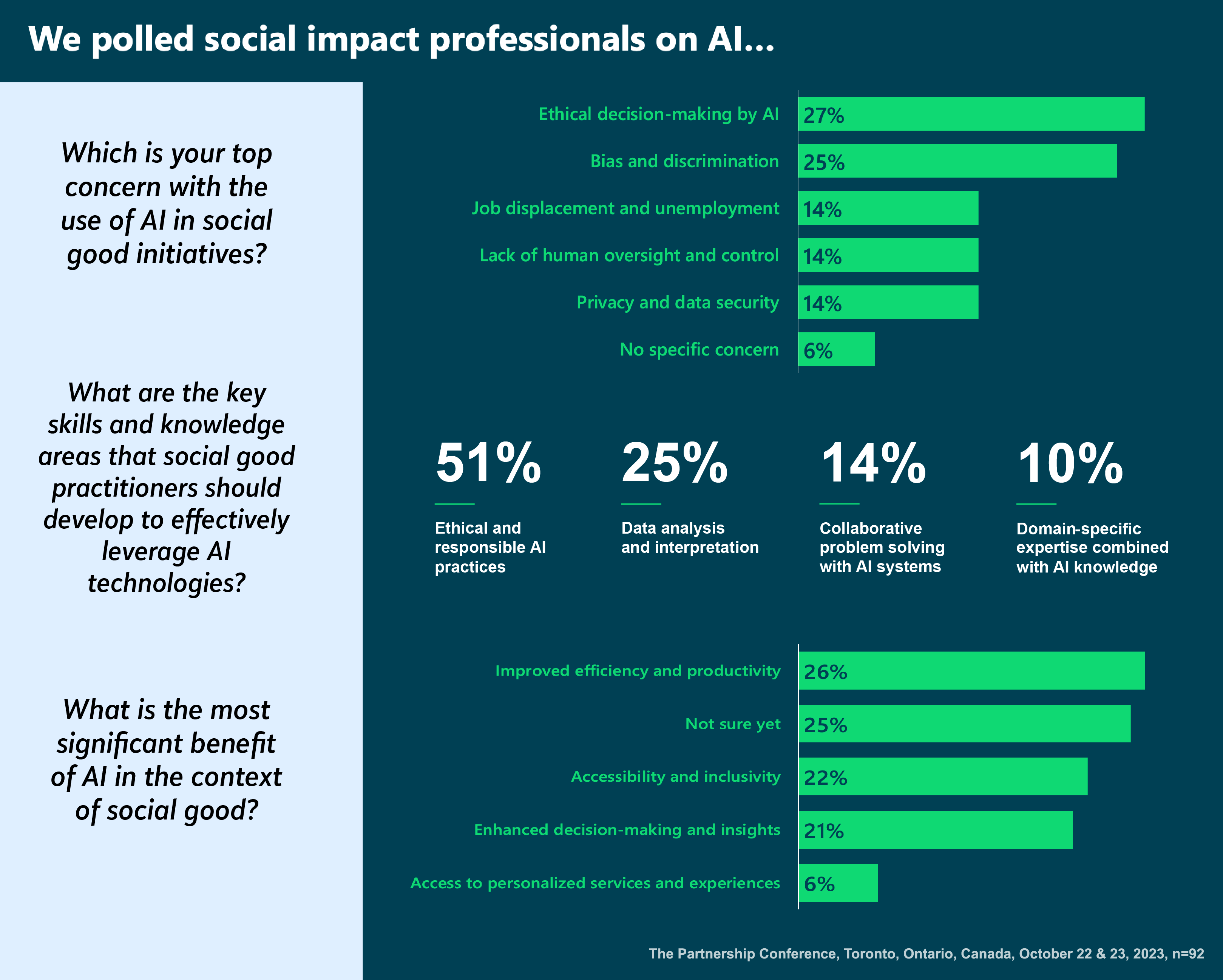

This aligns closely with our own survey of social impact professionals conducted at The Partnership Conference in Toronto, Canada, in late 2023. As seen below, within the context of using AI for social good initiatives, those polled identified improved efficiency and productivity as the most significant benefit, while ethical decision-making was a key concern, along with the risk of bias and discrimination.

Another commonality across social impact professionals and the general public is the uncertainty around AI. For example, 25% of social impact professionals were ‘not sure yet’ what the most significant benefit of AI is in the context of social good. Similarly, public views are also still developing, as 35% to 49% of Americans say they’re not sure what impact AI is having, across eight use cases in a Pew Research Center survey.

Key Skills and Knowledge

To better understand the role that AI will play in the workplace, navigate potential risks, and make the best use of AI technologies, employees and leaders alike need to expand their skill sets and develop new proficiencies. In fact, the data shows that 94% of people say they’re ready to learn new skills to work with gen AI, but only 5% of organizations are actively reskilling their workforce at scale.

For social impact professionals, the majority of respondents (51%) selected ‘ethical and responsible AI practices’ as the key knowledge area that should be developed to effectively leverage AI technologies. With the proliferation of AI, and specifically generative AI tools, it is imperative for leaders to learn what makes for responsible and ethical AI technology.

How to Spot Responsible AI

Responsibility to Your Team

Look for AI tools that build off the talents of users, rather than displace them.

- Is it accessible and easy to use?

- Are there various opportunities to check for bias in the content being generated?

- Is the tool solving a known problem and freeing up the user for more complex tasks?

Responsibility to Your Constituents

When adopting a new AI tool, you should closely read all published privacy resources and guidelines.

- Does the tool have access to proprietary company data?

- Is it sharing that information publicly?

- What processes are in place to maintain data security and privacy?

Responsibility to Your Cause

Above all, ask whether the AI tool will drive your impact forward.

- Will it enable greater social impact?

- Will it expand the reach of your programs?

- Will it free up administrative hours for team members so they can focus on other important work?

Blackbaud Impact Edge™

Interested in a responsible, AI-powered, impact reporting and storytelling solution for social impact teams of all sizes? You don’t want to miss the opportunity to learn about the newest offering from our Corporate Impact solutions, Blackbaud Impact Edge™.

By providing professionals like yourself with a centralized impact data solution, Impact Edge will help you jump-start the narrative for your social good story. Using unified data formatting to create charts, graphs, raw data analysis, and copywriting support through generative AI, this first-of-its-kind technology is built with our commitment to making data accessible, powerful, and responsibly managed by leveraging world-class privacy and protection practices. Click here to watch a quick overview video or request a demo!

You can also watch a webinar that dives into the history of AI, more data on public sentiments, practical social good uses, as well as strategies to leverage AI responsibly, by clicking the link below.